I'm actually surprised that I haven't heard this question yet. The last few months it seems everyone has been budget conscious to the point of the ridiculous. It's been difficult to convince customers that somewhere out there, real hardware still exists. BAC is supported on VMware, but based on our field experience we cannot recommend anything less than separate, dedicated DPS and Gateway hardware. Even getting virtual servers allocated has been a struggle. It seems like many organizations, in their zeal to reap the benefits of virtualization, have forgotten that under the hood there has to be sufficient hardware to actually support the number of virtual machines, especially if all the VMs are going to operate at full capacity.

What's perhaps really surprising to me is that companies continue to put full PCs on the desks of all of their employees rather than equipping them with ($400) netbooks or even just Blackberries or iPhones. So a $5,000 server for a core business process is out, but a $1,000 PC belongs on everyone's desk, even those employees who work remotely or who only need a machine for basic word processing and email tasks.

I have to admit, I don't understand the penny-wise, pound-foolish thinking about physical hardware. Four weeks ago my laptop, a two-year-old MacBook Pro, suffered a hardware failure at 5:00pm on a Friday. I knew that it would likely be at least a week before I could get it back from Apple, and a week's worth of lost productivity would easily cost more than a new machine. I barely hesitated to drop the three grand for a new laptop (out of my own pocket), yet companies with a national footprint, who need that hardware to run mission critical systems, aren't able or willing to do the same. Certainly there is a difference between the hard drive in my laptop and RAID 5 network attached storage, but nonetheless disk is cheap by all accounts. Utility computing via "cloud" providers is driving costs down as well, and it turns the argument that "we can't get hardware for this project because we don't have budget" into a non-starter. There's really no good excuse for the view that "hardware" has to be treated as a precious resource.

Sunday, October 4, 2009

Who Owns Monitoring?

Large companies are often able to support a 24x7 operations center, which makes a natural place for monitoring to occur, though perhaps not for the administration of those tools. Many of our smaller customers have struggled with the question of where their monitoring and its administration belong organizationally. By default, monitoring tasks generally end up as the responsibility of either a few developers or the administrators responsible for the systems in the production environment. Naturally, just because something is the default doesn't make it right.

Monitoring tools generally come into the organization via three sources: operations, support, or development. How tools come into an organization can say a lot about organizational culture. For example, operations and support will generally bring in a tool because they feel constrained by the visibility they have into the production applications and environments -- they have angry customers, but no way to appease them. Developers tend to focus on monitoring tools that enable them to build better applications, but often overlook the importance of tools in the production environment. The team that brings in the tools often ends up as the administrators of the tools, regardless of whether they have the appropriate skills or resources to provide adequate support.

For our customers who are trying to make the most of restricted budgets and headcount, we've found some common trends that lead to success.

- Appoint a champion: Find someone within the organization who has adequate time, skills, and organizational knowledge to become the dedicated subject matter expert for service management.

- Define a process for monitoring and triage: Make it clear who is responsible for operational monitoring and the process for escalation.

- Separate administration from monitoring where it makes sense: Administration of the monitoring tools and the actual monitoring may be handled by separate teams or personnel.

- Don't overlook support: Adding a centralized support engineering team within a support organization can streamline problem resolution.

- Defining a virtual triage team made from experts from database, networking, systems, and application teams that can be immediately activated in crisis situations is a better approach than waiting for the crisis and then taking action.

Ultimately who owns monitoring in an organization is driven by organizational needs and culture, but should be driven by a concerted decision rather than indecision.

Friday, October 2, 2009

Duct Tape Programming

Joel Spolsky has hit the mark again with his recent blog posting "The Duct Tape Programmer" - http://joelonsoftware.com/items/2009/09/23.html. There are two key quotes: "you’re not here to write code; you’re here to ship products" and “Overengineering seems to be a pet peeve of yours.” That's the premise in a nutshell -- quit over-engineering, start shipping. The only thing I fear, and some of the commentary across the net expresses this, is that too many will miss his message and instead read Spolsky as justifying sloppy decisions. That's not it at all. Instead, he's taking the experienced, pragmatic approach, which could be best summarized as "just simple enough." A good read, as are his other postings.

Management by Flying Around

Being up at 4:30 am and a long drive to Portland gave me a chance to reflect on a problem I've been mulling for a while now.

For decades, thinking about business and management has been driven by sports and military analogies and experiences. The post-war generation that built the United States into the world's largest economy brought practices and organizational structures from their military experiences. Even within technology we are not immune to this. When I first saw Scrum, an "agile" method for developing software, my immediate reaction was "This is exactly like the Romans structured their military command, 2000 years ago!" We intuitively understand command-control management, work in "teams," "quarterback" meetings, and of course what executive doesn't play golf?

J9's consultants are located all across the United States - I've never met in person some of the people I work closely with, and others I see in person only rarely. The tactics commonly deployed and many of the management techniques of the past quickly fall apart when you don't have the proverbial water-cooler. The inter-personal issues -- health, relationships, personal interests -- become difficult to track and yet plenty of research has shown management empathy to personal needs as a significant factor in employee retention and job satisfaction. Career planning and reviews, especially when criticism needs to be levied, are lost when timezones and thousands of miles separate your staff.

It isn't simply a problem in personnel management either. I recall vividly the first time I saw a Gantt chart, at age 13. Those colorful bars and perfectly placed diamond milestones sparkled with their organizational efficiency. Perfection, yet completely useless if your project consists of loosely related tasks without strict dependencies, especially one where the personnel ebb and flow in and out of the project. Installing a piece of software -- there's something you can put on a Gantt chart. Whether the customer has successfully developed the skills to support the software? A less well-defined task.

So here comes the summary: Companies are ever more virtualized, global, and 24x7, and it isn't just at the largest companies or in the executive office that these demands appear. The management practices of the past, with their roots in industrialism, simply aren't working. I don't yet know what the answer is, but change is imminent.

Wednesday, September 30, 2009

Happy Days are Here Again

Recession? What recession? J9 is actively seeking Solution Architects. Are you an experienced consultant who understands the benefits of life at a smaller firm, where you can direct your career? Check out our posting here: http://www.j9tech.com/careers.html and apply today.

Monday, July 6, 2009

But did you do the phosphorus test?

I heard the phone clang down and my colleague Steve distraughtly mumble "She's going to kill the fish." His wife called to tell him about a phosphorus problem in their fish tank at home. She's a medical researcher, a biologist by training. Steve's first reaction when she told him there was a phosphorus problem was to ask if she had in fact done a phosphorus test. No, she said, but she'd run through all of the other chemical and algae tests, so of course it had to be the phosphorus and thus she'd started adding more phosphorus to the tank -- they'd know in a few days if that was the problem. Steve, imagining coming home to a tank of dead fish, was not impressed that his scientist wife had failed to use the scientific method at home.

It's so often like that in technology as well. Despite years of rigorous training to use the scientific method to guide our actions (it is called "computer science" for a reason), it's easy to throw all that away when faced with a challenge. A customer came to me the other day asking about monitoring tools to help with a production triage situation for a failing web service. A developer assigned to the task interrupted us saying that a fix had been deployed ten minutes prior and it looked like it was working. Let's reflect upon that:

a) No load or performance testing scripts existed for this web service.

b) No monitoring or profiling tools had been deployed with this service in either a pre-production or production setting.

c) A hopeful fix had been hot-deployed to production and left to run for a mere ten minutes before victory was declared.

d) No permanent monitoring was put in place to prevent the next occurrence of the problem.

e) Apart from a few manual executions of the service and a face-value assessment by one individual, no further validation to correlate the fix with the perceived problem occurred.

Chances are good that Steve's fish will be fine, but can the same be said for those cases where we play roulette with mission critical IT systems? Just as in the case of Steve's fish, there is no legitimate reason for a lack of objective, quantitative analysis except basic human apathy. Anyone who has ever taken a statistics course or been face-to-face with a serious production issue knows that ruling out many options with other tests does not make it safe to jump ahead and act on a gut feeling -- why abandon a working method for one that brings doubt, risk, and exposure to criticism? Run the phosphorus test and let the results be your guide.

Friday, July 3, 2009

A video speaks a thousand words

It is nothing new for us to be constantly developing new educational tools: demos and lab materials for on-site trainings, or content for our evolving KnowledgeBase that augments the HP software support we provide to our customers. But the videos are the biggest hits so far. They pack a three-minute punch of information without leaning on those lazy PowerPoint icons. Check 'em out.

Business Transaction Management in palatable terms (no yawning required):

http://www.youtube.com/watch?v=49tQ9BpnrT0

In case you missed the first one, here it is:

Why J9? Well, since you asked...

http://www.youtube.com/watch?v=FjPlvO01SmA

Please rate them! We'd love to get some feedback on how well these videos connect with you, and for God's sake, if they are still boring, please let us know.

Thursday, July 2, 2009

How would you test a 4000 user community?

That question was the lead-in to a discussion I had with a colleague this week. He had been interviewing someone for a performance testing role, and that was the key question that could make or break a candidate. The typical response goes something like "I'd start with one user, then move on to five, then ten, then 50, then 100, then... all the way up to 4000." While this is the most common answer, it is entirely wrong. This kind of common yet broken testing process explains why each of us who joined the conversation could retell case studies of customers who had spent multiple years (and millions of dollars) on failed testing efforts.

The right answer goes like this:

a) Ask the hard questions

How many of the 4000 users are concurrent users and what is their use pattern? For example, many batch billing systems do nothing for 29 days per month, but then run through a massive number of transactions on the last day. Other systems have limited daily use until 5pm when their user community arrives home from work and then signs in. Are the users spread across multiple timezones?

If the data to discern the number of real concurrent users isn't available, that actually means two things to our project:

1) A separate project is needed to put in place tools to capture user behavior. The lack of such information can cause poor decisions in the areas of testing, capacity planning, security, and product usability design and functionality.

2) If no such data exists and the 4000 number simply means we have 4000 users in our database, we can now back into a more realistic upward bound through some basic calculations.

b) Functional performance test

Start with one user as a means of functional performance test. This enables you to validate your test cases and test scripts and flush out any immediate functional problems with the application(s).

c) Longevity testing, peak testing, failover testing

There are a variety of other tests with greater pertinence and validity in understanding the application's serviceability than simply running through the same script with a randomly increasing number of virtual users.

d) Load and Performance testing

If we've determined that simply starting with one user and continuing to double isn't the right process for load testing our application, then what is the right heuristic for getting to the Nth user? The answer is that it doesn't really matter, as we've determined, in effect, all of the above through the answers to our questions about the user community. If we have 4000 users in our database but don't know how and when they use the application, a test of 200 users as a top number is just as valid as a test of 2000 users. Using these numbers, though, one can arrive at some guidelines by looking at the length of a user day. For example, if our application is used by an internal business customer that only works standard business hours in the eastern time zone, then we can surmise a roughly 8 hour work day, 5 days per week. Divide the 4000 users by 8 hours and we can take an educated guess that there are 500 users per hour. Multiply the 8 hour day by 60 to get 480 minutes, divide the 4000 users by 480, and we can surmise that in any one-minute interval there are likely to be about 8 users on the system. In the absence of further information about our user community, we now have real, actionable numbers to test against. Rather than the dozens and dozens of incremental tests we were potentially facing, we can now break our cases into one user, 10 users, and 500 users; anything above that is essentially to discover the upper bound of our capacity.
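For anyone who wants to plug in their own numbers, here is a minimal sketch of that back-of-envelope arithmetic in Python. The function name, its parameters, and the choice to round the per-minute figure up to the nearest ten are my own assumptions for illustration; the sketch also assumes users are spread evenly across the working day, which is exactly the simplification made above when no real usage data exists.

```python
import math


def back_of_envelope(total_users, hours_per_day=8, round_to=10):
    """Rough load-test tiers, assuming users are spread evenly over the day.

    Hypothetical helper: this is the blog's arithmetic, not a measurement
    of real concurrency.
    """
    minutes_per_day = hours_per_day * 60
    users_per_hour = total_users / hours_per_day
    users_per_minute = total_users / minutes_per_day
    # Round the per-minute figure up to a convenient test size (8.3 -> 10).
    mid_tier = math.ceil(users_per_minute / round_to) * round_to
    return {
        "minutes_per_day": minutes_per_day,
        "users_per_hour": users_per_hour,
        "users_per_minute": users_per_minute,
        "tiers": [1, mid_tier, round(users_per_hour)],
    }


estimate = back_of_envelope(4000)
print(f"users per hour:   {estimate['users_per_hour']:.0f}")
print(f"users per minute: {estimate['users_per_minute']:.1f}")
print(f"test tiers:       {estimate['tiers']}")
```

With 4000 users and an 8 hour day this gives 500 users per hour, a little over 8 users per minute, and test tiers of 1, 10, and 500 users. Once real usage data is available, those measured figures should replace the even-spread assumption.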

These steps are a productive tool to improve the quality of your testing, as well as a great way to gain new insight into the candidates you interview.

Monday, June 29, 2009

OVIS to BAC migration, anyone?

J9 Technologies, Inc. Announces Migration Solution for OpenView Internet Services Users

J9 Technologies, Inc. announces a limited-time migration solution for Hewlett Packard customers currently using OpenView Internet Services (OVIS). As of December 31, 2009, current OVIS customers must migrate from OVIS to HP's BAC and SiteScope solutions in order to receive licenses, customer support, patches, and updates from HP. As a result of the Mercury acquisition, HP announced that it is dropping support for the OVIS solution in favor of the Business Availability Center (BAC) and SiteScope solutions. HP is offering, free-of-charge, a license exchange from OVIS to BAC and SiteScope.

J9 offers expert services to streamline the customer's license acquisition process, pre-migration planning, end-to-end migration from OVIS to BAC, and identifying best practices for ongoing utilization of the new BAC solution. With J9, the migration process is not just one of conversion, but evolution. J9's solution reduces a customer's time to value risk during the migration process.

J9 works with each customer individually to determine their current state, identify gaps and provide a plan for migration. Throughout the process, J9's experts partner with the customer to ensure the migration not only replaces their current state, with no gaps, but allows the customer to be positioned to expand their capabilities utilizing the enhanced BAC platform. J9's services expand beyond OVIS migration solutions to offer a complete range of basic and advanced BAC implementation services and training programs designed to enable the customer to take full advantage of the power of the BAC platform.

Business Availability Center brings a robust platform to address a broad range of application environments. BAC's rich feature-set offers customers an enhanced platform addressing their Business Service Management needs and initiatives, including application diagnostics, service level management, business transaction management, and superior dashboarding capabilities. The BAC platform is capable of supporting a wide range of application environments beyond standard web-based applications, resulting in enterprise-wide coverage for the customer's most business critical systems, such as ERP/CRM, E-Mail, SOA, Web 2.0, and client-based systems.

About J9 Technologies

J9 Technologies is a certified Hewlett Packard Gold Partner, specializing in Business Service Management and Application Performance Lifecycle management.

J9 offers strategic consulting, training, installation, and ongoing support for HP software products such as SOA Systinet, Service Test, Business Availability Center, Diagnostics, LoadRunner / Performance Center, Real User Monitoring (RUM), and TransactionVision. With a focus on application diagnostics, composite application management, and business transaction management, J9 Technologies strives to ensure that customers can quickly identify the root cause of issues and minimize mean time to resolution.

For more information:

J9 Technologies, Inc.

24 Roy St., Box 211

Seattle, WA 98109

Tel: (866) 221-8109

Fax: (206) 374-2901

www.j9tech.com

Wednesday, June 24, 2009

J9 @ HP Software Universe 2009

We are just catching our breath after a few long and fruitful days in Las Vegas, where HP held its annual Software Universe conference. We exhibited, we rolled out our OVIS to BAC migration offering, we chatted, we happy hour-ed, we presented, and after it all, we slept. For a long time.

And now here we are, in all of our glory, having emerged from the gambling flames of software sales and services just a little bit tougher, a little bit wiser, and feeling a lot more connected. Thanks to everyone who came by the booth (and picked up one of our lovely one-handed bottle openers pictured above) and who attended our happy hour event. It really was a blast getting to be face to face with all of these people we work with every day. See you next year!

Monday, June 8, 2009

What are you testing?

Yet another Monday after a red-eye flight, at another new customer in some city far from home. I'm ushered into a conference room with a team randomly checking their watches and Blackberries, each tense, nervous, and wishing they were somewhere other than this. A project manager begins the briefing, which everyone has heard a dozen times before: the project is past its scheduled delivery, application performance is terrible, the users are angry, the sponsors are talking about pulling funding, and the order for the past month has been forced 15 hour days and weekends. This afternoon they want to begin another series of load tests and they ask me if our team of consultants will be able to help them. My only question is: What are you testing?

It's a simple question. I find myself asking it several times per day with customers and sadly the usual answer is a blank and distant stare. The reality is they don't know, but it's not their fault. To be honest, I'm not entirely clear myself on how the state of the industry reached this point. The trend though goes like this:

1) Testing happening just prior to deployment, often not even starting until the same week.

2) Tests being run by an operations team or perhaps by a lone consultant, without the involvement of a business owner who understands the functionality of the application.

3) A random mishmash of tools, some properly licensed, some not, downloaded and used without any training or guidance.

4) Test cases being made up on the fly - "Hey, what if we try this?" without any rhyme or reason as to the validity of the test.

5) Little or no written record of the test cases executed or their results, leading to duplicate efforts, errors, and a lack of objectivity.

It all really boils down to that same question -- if you can't answer, objectively, the reason for a particular test run, then your efforts are for nought. Once you are on that treadmill of test run after test run, it's impossible to get off without a hard reset; the only objective test at that point will come when the application is introduced to the real world.

Once an organization has reached this point, where production firefighting is a daily routine, the only way to bring order back is to reverse the list above. Testing must be an integrated part of any development or implementation project. Developing a written, comprehensive, repeatable test plan requires input not only from technical teams but also from end users and business analysts who understand the use cases -- both common and edge. Only then will you know what you are testing.

Thursday, June 4, 2009

This is a plea, continued...

Shortly after I wrote the last entry, about tools that get purchased and not used, I realized that I hadn't really described the reasons why this happens. Sure, there are reasons like insufficient training, inadequate tools for the problems at hand, or organizational ownership and politics. But the most common reason is far more human and harder to mitigate: apathy.

A friend of mine is a Six-Sigma and Toyota Process expert. He knows and understands quality and process control methods inside and out. I asked him about the barriers to organizational adoption of these practices. His answer was very telling. It wasn't money, time, tools, training, or anything measurable. Instead his answer was very simple -- because people don't like being told what to do.

There's a parallel here. Unless a tool, process, practice, or even idea fits within someone's world view, they simply aren't going to adopt it. It's even worse if they feel like something is being forced upon them, rather than being an engaged participant. Even if they feel invested in the tools, good old fashioned apathy can take hold.

Why? Incentives and consequences. It's just tremendously easy to become apathetic if there are no incentives or consequences to our actions. Many times after J9 consultants find a problem with a customer's application, we hear rumblings of "oh yeah, I wondered if that was a problem." It's easier to turn a blind eye than to invest oneself in learning a tool, exploring a problem, and coming up with a solution, especially since in so many organizations that live by firefighting, volunteering is akin to accepting blame for the problem.

So, the guiding points here appear to be:

1) It's imperative that all types of users are included in the selection and implementation process so that they feel invested.

2) Management has to continually communicate intentions and uphold the standard.

On this second point, here's a real example of how this can be done in a way that emphasizes the point yet stays away from simply giving orders. Say for example it's a Monday morning triage meeting and an outage that happened over the weekend is the topic. The question isn't simply "What happened?" but also "What did the tools tell you happened?" This is a means of continuous communication about the expected tools and the expected method, plus a means of continual assessment of the value proposition -- if your tools are proving less than useful, then perhaps there is a legitimate reason why they aren't being used.

Saturday, April 25, 2009

This Is A Plea

This is a plea: please, please use the tools you've invested in. Nothing makes a J9 consultant sadder than to see customers throw their money away on tools and training. Second to that is to see customers try to build tools on their own when there are other options available.

Two cases in point here: HP Diagnostics and HP TransactionVision.

The Diagnostics product has been around for many years, coming into the HP fold in January 2007 as part of the acquisition of Mercury Interactive. Many customers are resistant to using this product, believing either that its overhead is extreme or that free/cheap tools can provide the same information. The truth is, Diagnostics adds very minimal overhead to any production system if it is configured correctly. It has been safely deployed in high-volume production settings at dozens and dozens of J9's customers. Many free or inexpensive tools offer point solutions, but none are capable of providing the complete environment monitoring and historical data collection capabilities of Diagnostics.

Many customers have recently shared with us that they are so desperate for business transaction reporting and monitoring that they are considering building their own solutions. TransactionVision came to HP via an acquisition of Bristol Technologies, which had been a partner of Mercury Interactive. When we demonstrate it, the feedback is almost always a sense of relief: "Thank goodness I don't have to build this for myself!" Sensors are deployed for Java, .Net, WebSphere MQ, CICS, and Tuxedo, with reporting and monitoring integrated into HP Business Availability Center.

So, please, don't build what you can buy, and if you are going to buy, please don't let it become shelfware.

Monday, April 20, 2009

When Java Memory Issues aren't.

What I'm going to write is likely an unpopular viewpoint, but one that needs to be expressed. One of the most frequent sentiments expressed by customers when we are doing an application analysis is that their Java application is suffering from crippling memory issues. I'm not going to go on record saying that memory issues don't happen in Java -- I'm quite certain that there are situations where legitimate memory management issues within applications and the virtual machine happen. But, a more common scenario is something like customers placing very large objects into user http sessions, then being surprised when their application server slows to a crawl and crashes out of memory. Guess what? That's not a memory issue, that's a poor architecture and design issue.

Normally about this time I hear a chorus of "but, but, but! Why isn't the VM managing my memory correctly?!?!?!" It's a virtual machine, not a miracle worker. In the case above, the http session is being abused and overused. There are several options to that particular problem, depending on the business requirements:

a) Reexamine necessity of all data being stored in the session and reduce.

b) Move much of the data to a separate cache with more granular control over cache clear/timeout strategy.

c) Externalize session data entirely to a separate user session server with purpose-built hardware and cache timeout strategy.

All of the above are overkill, you say? Sure beats running out of memory, it seems to me. About this time I often hear someone pipe up with "Why don't we just force garbage collection?" or some similar pearl of wisdom, such as explicitly finalizing objects. Both are flawed -- you'll recall that the VM specification states that marking an object to be finalized does not explicitly force it to be finalized on any particular schedule, and any suggestion of forced garbage collection is specifically not recommended. Generally the response I hear to this is some grumbling about a lack of control over memory management. Therein lies the rub: If you need explicit memory management due to the nature of your requirements, then Java and .Net are not for you. And, as demonstrated above, many issues that at first appear to be memory related are in fact not.
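As a rough illustration of option (b), here is a minimal sketch of a cache with an explicit timeout, written in Python only to keep it short; the same idea applies to a cache sitting beside a Java application server. The class name `TimedCache`, the key format, and the 30-second timeout are all hypothetical. The point is that the user session keeps only a small key, while the large object lives somewhere with its own expiry policy.

```python
import time


class TimedCache:
    """Tiny cache whose entries expire after a fixed time-to-live."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock          # injectable so expiry can be tested
        self._entries = {}           # key -> (expires_at, value)

    def put(self, key, value):
        self._entries[key] = (self._clock() + self._ttl, value)

    def get(self, key, default=None):
        entry = self._entries.get(key)
        if entry is None:
            return default
        expires_at, value = entry
        if self._clock() >= expires_at:
            # Expired: drop the entry so the large object can be reclaimed.
            del self._entries[key]
            return default
        return value

    def __len__(self):
        return len(self._entries)


# Hypothetical usage: the session stores only the key "report:42".
fake_now = [0.0]
cache = TimedCache(ttl_seconds=30, clock=lambda: fake_now[0])
cache.put("report:42", "very large report object")
fresh = cache.get("report:42")
fake_now[0] = 31.0                   # simulate 31 seconds passing
stale = cache.get("report:42")
```

A caller that gets nothing back after expiry simply rebuilds or reloads the object, which is usually far cheaper than an application server that has run out of memory.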

Friday, April 17, 2009

Don't be held hostage by the BOfH

The Bastard Operator from Hell stories (http://www.theregister.co.uk/odds/bofh/) have delighted IT personnel since the early 1990's with their comic view of the power of systems administrators and their place in corporate America. For those not familiar, the basic premise is that of a renegade system administrator who makes mischief on systems and users as he pleases. The real world version is unfortunately not as amusing as the comic.

Years ago I worked at a company held hostage by such an individual. As systems crashed at random intervals, enough peculiarities came to light to make it apparent that this individual took some strange delight at causing disasters that he could then "rescue" as a way of self-promotion. Sadly in that organization it worked for a long time, mostly out of fear. After all, this BOfH was of course the only one who understood how much of the network was configured, the only one who knew various passwords, and other assorted bits of knowledge. Past attempts to involve others had only increased the number of "surprises" which further increased the dependence.

This issue is particularly relevant right now as tough economic times cause companies to scale back. Cutbacks, in the form of business activities, training, or personnel, can cause key knowledge to consolidate into a few essential personnel. This introduces tremendous organizational risk and can be harmful to both the staff and the company as a whole in the following ways:

1) Makes it impossible for those individuals to take sick days or vacation, increasing the potential for burnout.

2) Puts the company at the mercy of those individuals in accomplishing work.

3) Breeds animosity through the creation of a "two-tier" team where some are "in the know" and others are not.

4) Creates a priority mismatch where the agenda of a few can trump the priorities of the team and company.

5) Contributes to organizational churn as resources are directed toward firefighting.

The best way to manage this is simple: take strides to counter it and build a culture that encourages openness rather than firefighting and heroics. Build in knowledge sharing as personnel evaluation criteria. Assess the resource needs of your teams in order to ensure adequate staffing and tools. And don't be a victim of the BOfH.

Friday, April 10, 2009

Why J9?

Check out our new video! It'll take 3 minutes or less, we promise.

http://j9tech.com/whyJ9.html

Or check us out on youtube:

http://www.youtube.com/watch?v=FjPlvO01SmA

To keep up to date on our new videos and other new developments here at J9, shoot us an email at info@j9tech.com.

Reducing the Operational Costs of SOA

Introduction

Software evolution has taken us a long way from the mainframe to distributed computing in the cloud. What hasn’t changed is the need for operations teams to effectively manage the applications and infrastructure that businesses and organizations rely on. In this article, we will discuss how to effectively manage these composite/distributed/SOA applications by leveraging the existing expertise of operations teams along with tools and methodologies that ensure high availability, reduce the impact of outages, and cut out the reliance on costly all-hands bridge calls. In addition, we’ll give a few examples of how other organizations have adapted to these new software architectures and steps taken to prepare for them before they landed in production. Lastly, we’ll cover the cost of not doing anything and how that price is paid elsewhere.

Case Study: Cable Nation

The challenges for today’s operations teams are increasing exponentially, given the number of physical and virtual hardware layers that are used to provide a single piece of functionality. One fictional company that I’ll refer to throughout this article is a cable company called Cable Nation. Cable Nation used to be a small, regional cable provider with a single billing system that was hosted on a single mainframe system. In its infancy, the health of the billing system could be determined by the sole operations engineer who could log into the mainframe and check the vital system stats – CPU, memory, disk, IO rates and a few other key metrics that were hardly ever out of whack. Today, the billing system for Cable Nation stretches across multiple physical machines and virtualized devices, spans many internal data centers, and integrates with multiple external vendors. This rise in complexity solves many business-related issues involving disparate accounting methods across subdivisions, access to customer account information across organizations and ensuring that some of this data is available to external business partners. The architecture chosen to meet these new challenges is a SOA architecture.

Nowadays, tracking down a particular issue with the billing system involves a lot more servers, databases, applications, services and multiple mainframes. As a functional unit, the billing system continues to provide many of the same functions that it always has, but now it is much more difficult to find the root cause of a problem due to the distributed and complicated nature of this system. In many cases, the application architects are brought in to help resolve critical production issues, primarily because the operations teams are ill-equipped with their system monitoring tools to address what are now issues that can only be identified and isolated using application monitoring tools. In this paper, we will discuss some of the fundamental differences between the systems versus application management approaches and some of the tools and techniques that enable operations teams to more effectively manage these new systems with the same level of adeptness as they were able to when these systems were less complex.

In the broadest sense, the problems encountered in these distributed systems are tractable, and identification of the root cause of an issue is largely deterministic, based on the capture of a few key metrics or key performance indicators (“KPIs”). In many cases, the source of a software problem may be tracked down to a single web service, operating on a specific machine, hosted in one physical data center, administered by a person who is responsible for the overall uptime of that service. However, companies like Cable Nation pay a huge price in terms of the number of hours spent by engineers on bridge calls, development tasks being delayed, or lost customers due to the inability to service them in a timely manner. This doesn’t even count the additional applications that depended on that single service that failed during the down time or the cost of missed external SLA’s tied to real dollars. Usually, these types of scenarios can be reduced with the appropriate amount of testing, but in many cases, the cause of downtime can be as trivial as a hardware upgrade or the application of a patch that causes unintended side effects. At the end of the day, there are always going to be problems in production and reasonable measures must be taken to alleviate the cost of those errors.

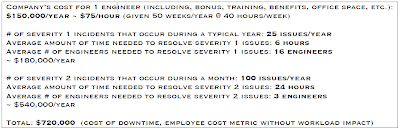

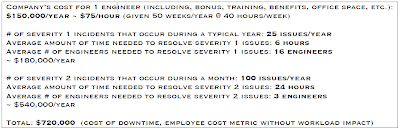

In essence, the primary cost of decreased availability and performance is due to the time and # of resources needed to resolve a problem. Consider this relatively conservative equation:

In this case, we’re looking at ¾ of a million dollars just for 125 issues!!! Imagine how quickly this number increases when you double or triple the # of incidents. Note that this was also a very conservative estimate. In many companies, the # of engineers involved in severity 1 & 2 issues is much higher – I’m sure many of you can relate to hanging out on bridge calls until a problem is resolved. Imagine how much money would be saved if the # of engineers needed was cut in half or the mean time to repair (“MTTR”) was reduced even by 25%? This is the magnitude of the problem and in this particular scenario, we’re considering that the # of incidents stays the same. In other words, there are some processes that we can put in place to reduce the potential # of incidents, but we’ll talk about those separately. The key to this is to recognize that the cost impact just in terms of people and their time is a large part of what we’d like to address as a part of this discussion.
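The inputs to the equation are not reproduced in this text, so the following is only a rough sketch of the kind of arithmetic involved. The per-incident figures (6 engineers, 10 hours to repair, $100 per hour) are assumptions picked so that 125 incidents come to $750,000; they are not the original numbers.

```python
def incident_cost(incidents, engineers, hours_to_repair, hourly_rate):
    """People cost of incidents: incidents x engineers x hours x rate."""
    return incidents * engineers * hours_to_repair * hourly_rate


# Hypothetical inputs (assumptions, not figures from the original equation).
baseline = incident_cost(incidents=125, engineers=6, hours_to_repair=10, hourly_rate=100)

# Same number of incidents, half the engineers on each bridge call.
half_staff = incident_cost(incidents=125, engineers=3, hours_to_repair=10, hourly_rate=100)

# Same number of incidents and engineers, MTTR reduced by 25%.
faster_mttr = incident_cost(incidents=125, engineers=6, hours_to_repair=7.5, hourly_rate=100)

print(baseline, half_staff, faster_mttr)
```

Under these assumed inputs, halving the engineers involved halves the cost, and a 25% MTTR reduction removes a quarter of it, all without reducing the number of incidents.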

In the case of Cable Nation, executive management had already recognized this cost was eating away at their ability to do business, both in time spent resolving issues and in time diverted away from new development projects. In order to keep these costs under control and adhere to their hiring freeze, they began looking for tools that could address the two main factors they had control of:

* Reducing the time involved in resolving issues

* Reducing the # of people involved in issue/problem resolution

Taking a top-down approach, the best means of reducing the time to resolve problems isn’t simply getting more people involved, but rather arming a few key individuals with the appropriate quality and quantity of data that allows them to quickly pinpoint, triage, escalate, or resolve the problems in a matter of minutes. In the case of distributed applications, the sheer # of metrics that must be tracked is daunting in and of itself, but when those metrics and their relevance change due to a few simple software modifications, then the ability to triage issues is compromised greatly. In the case of Cable Nation, the # of unique web services rolled out on a single release was measured in the 100’s initially. Only the developers and a few of the QA engineers knew how and when these services communicated with both internal and external systems. This dependence on the knowledge of developers and QA teams kept them constantly in the mode of fighting fires and taking on the role of operations staff due to the inability to track down issues using the same old methods. While the first release of the SOA re-architecture was deemed a success, the second release was delayed multiple times, primarily because development was constantly brought in to resolve production outages and performance issues. The frustrating part for the developers was that they produced almost exactly the same # of bugs that they had when developing standalone (non-SOA) applications. The main difference was that the operations teams were ill-equipped to handle the complexities and issues associated with this new distributed architecture and so they were often unable to help solve problems.

So how do you prevent the scenario that Cable Nation faced? In other words, how do you arm operations with the effective tools and expertise to manage a SOA-based architecture? We will now begin to answer these questions and address the costs associated with not taking the appropriate steps…

Understanding MTTR

I had mentioned earlier the concept of Mean Time to Resolution, sometimes called Mean Time to Repair – luckily both can use the MTTR acronym. In the problem resolution process, there are actually 2 phases – problem identification and then the problem repair. In many cases, the identification phase is the most difficult. Usually, the symptoms of a particular issue may be similar to another that had been encountered, but often it turns out that the root cause is vastly different. Take the following example: “The database appears to be slow” is the issue identified, but the root cause may be due to a number of factors: network I/O, disk I/O, missing indexes, tablespace locations, SAN storage, etc. The key to effectively reducing the mean time to identification (“MTTI”) consists of having the best data and a person equipped with minimal training to interpret the data. For instance, in the case above, the MTTI could be reduced if the tier 2 support engineer was armed with an alert or report that tracked the performance of a single database query over time and was able to spot an increase in time. The escalation then would be handed off to the DBA who would tweak the execution plan for the query. All of this proactive identification and resolution may have very well taken place without having to raise a severity 1 or severity 2 incident.
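The alert described above, one that tracks a single query's response time and flags an increase, could be sketched roughly as follows. The function name, window sizes, and the 1.5x threshold are all assumptions for illustration; a real monitoring tool would have its own baselining method.

```python
from statistics import mean


def latency_regressed(samples_ms, baseline_size=5, recent_size=3, threshold=1.5):
    """Return True when the recent average latency exceeds the baseline
    average by more than `threshold` times."""
    if len(samples_ms) < baseline_size + recent_size:
        return False  # not enough history to compare
    baseline = mean(samples_ms[:baseline_size])
    recent = mean(samples_ms[-recent_size:])
    return recent > threshold * baseline


# Hypothetical response times (milliseconds) for one query, oldest first.
steady = [40, 42, 41, 39, 43, 42, 40, 41]
degrading = [40, 42, 41, 39, 43, 70, 85, 90]

steady_alert = latency_regressed(steady)
degrading_alert = latency_regressed(degrading)
```

With a check like this feeding a report, the tier 2 engineer has something concrete to hand to the DBA ("this query has roughly doubled in response time") rather than "the database appears to be slow."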

Unfortunately, in complex software systems, humans are often the weakest link in determining the root cause of issues. In the best case scenario, all relevant system health and metric data would flow to a single engine that was able to rank, sort, categorize and analyze the data until the problem was detected. Once the issue was found, all the operator would have to do is press a big red button that made the problem go away once and for all. In reality, the big red button doesn’t exist, but there are many tools that provide the operations engineer the data that will allow him to resolve the issue on his own and within a fraction of the time it would normally take.

So What Makes SOA Management Different?

You’ll note that we’ve steered clear of the SOA terminology mostly through the previous paragraphs. From the perspective of the operations engineer, whether the application uses web services or adheres to a SOA architecture is not nearly as relevant as understanding the differences between system and application management. In other words, while it’s advantageous for operations teams to understand some fundamentals of SOA, it is not necessary for them to be experts in the lowest level details other than how problems should be identified and resolved. One example of this is the use of web services security – the operations team may need to know that there are web services that help govern the security of the SOA system and that there are key metrics that indicate whether or not the authentication system is operating at its optimal capacity (which is a combination of CPU utilization on the authentication web service, the # of concurrent authentication requests and an optimal connection pool to the authentication database). Operations engineers do not need to understand the specific authentication algorithms used or the fact that XML is the primary on-the-wire protocol. It is these differences that separate operations from development, QA and the business. At some point, the well-formedness of the XML or a buggy authentication algorithm may be identified as the root cause of an issue, but ideally the operations teams have ruled out all other problems before handing it off to the R&D engineers to investigate. Hopefully, the tools have also captured the information necessary to resolve these lower-level problems as well.
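To make the authentication example concrete, here is a minimal sketch of how the three KPIs mentioned above (CPU utilization, concurrent requests, and connection pool usage) might be combined into one health check that an operations engineer can read without knowing the service internals. The type name, field names, and threshold values are hypothetical and would need to come from testing of the actual service.

```python
from dataclasses import dataclass


@dataclass
class AuthServiceMetrics:
    cpu_utilization: float     # fraction between 0.0 and 1.0
    concurrent_requests: int   # authentication requests in flight
    pool_in_use: int           # database connections currently checked out
    pool_size: int             # total connections in the pool


def auth_health(m, max_cpu=0.80, max_concurrent=200, max_pool_ratio=0.90):
    """Return the names of KPIs that breach their (assumed) thresholds.
    An empty list means the service looks healthy."""
    breaches = []
    if m.cpu_utilization > max_cpu:
        breaches.append("cpu_utilization")
    if m.concurrent_requests > max_concurrent:
        breaches.append("concurrent_requests")
    if m.pool_size > 0 and m.pool_in_use / m.pool_size > max_pool_ratio:
        breaches.append("connection_pool")
    return breaches


healthy = auth_health(AuthServiceMetrics(0.55, 120, 10, 50))
strained = auth_health(AuthServiceMetrics(0.92, 120, 48, 50))
```

The design choice here is that the check reports which KPI is out of range rather than a bare pass/fail, so the escalation can go to the right team the first time.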

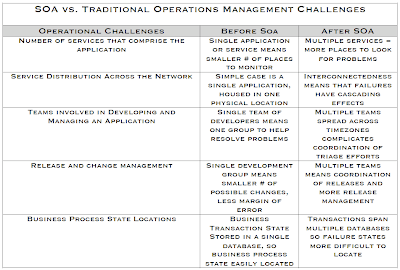

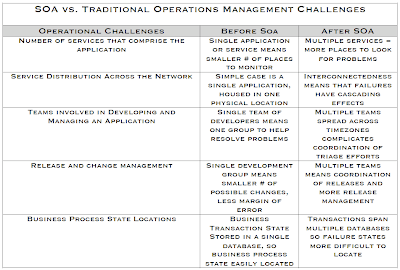

The differences between SOA/distributed systems management and traditional application management can be described primarily as a function of the number of unique services and the distribution of those services across the network. In addition to the distributed nature of the applications, traditional application team boundaries are a challenge as well. This can complicate the resolution of an issue because there is often more than one distinct development group behind a single “application”. This makes SOA application development quite different from more traditional development, and it has a downstream effect on how these applications are managed. The differences between SOA management and the management of more traditional non-distributed applications are summarized in the following table:

In order for operations teams to deal with these issues effectively, there are a few strategies that we’ll cover in the next section.

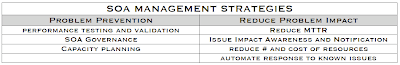

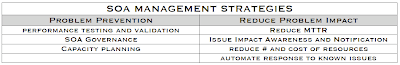

Developing a SOA Management Strategy

At the heart of any SOA management strategy is the recognition that loose coupling brings with it an increased amount of management. The traditional “application” may consist of a single web page along with two web services, or it may stretch across multiple service layers, consisting of both synchronous and asynchronous requests. The view for operations teams into these varied application topologies should be simple and consistent, so that when a performance or availability issue occurs, the exact location (physical, virtual, and relative to other services) can be identified along with the impact it has on other applications. A cost-effective management strategy for SOA consists of the following key elements:

Problem Prevention – Performance Testing

Obviously, the best way to resolve issues is to prevent them from occurring in the first place. The 1-10-100 rule should always be considered – it costs $1 to solve a problem in development, $10 to solve the same problem in QA, and $100 to solve it in production. To ensure that operations teams are ahead of the curve, the application and QA teams need to determine the Key Performance Indicators that are relevant to the operations teams. In many cases, this determination is a collaborative effort between QA, operations and development teams.
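As a rough illustration of the 1-10-100 rule, the sketch below totals the relative cost of a batch of defects depending on the stage where each one is fixed. The multipliers come from the rule itself; the defect counts are invented for the example, and `relative_cost` is a hypothetical helper rather than part of any tool mentioned here.

```python
# Relative cost multipliers taken from the 1-10-100 rule.
COST_BY_STAGE = {"development": 1, "qa": 10, "production": 100}

def relative_cost(defects_by_stage):
    """Sum the relative cost of defects, keyed by the stage where each was fixed."""
    return sum(COST_BY_STAGE[stage] * count
               for stage, count in defects_by_stage.items())

# Hypothetical example: the same 20 defects, caught early versus caught late.
caught_early = {"development": 15, "qa": 4, "production": 1}
caught_late = {"development": 2, "qa": 3, "production": 15}

print(relative_cost(caught_early))  # 15*1 + 4*10 + 1*100 = 155
print(relative_cost(caught_late))   # 2*1 + 3*10 + 15*100 = 1532
```

With these made-up numbers, letting most defects slip into production costs roughly ten times as much as catching them in development and QA.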

The primary reason to identify KPIs is to reduce the total number of potential metrics that an operations engineer is responsible for tracking and understanding. In some cases, the KPI list is a best guess based on data gathered during performance testing. In other cases, it can be extracted by thumbing through help desk tickets and incident and problem logs, or simply by interviewing individual developers to identify components or functionality that may be cause for concern. At the end of the day, teams that conduct extensive testing not only root out problems before they creep into production, but as a side benefit also narrow down a core set of metrics that operations teams can use to track the health of the application in production.

In addition to manually identifying KPIs, many application monitoring tools have out-of-the-box configurations that vastly reduce the time and effort needed to identify them. For instance, HP Diagnostics monitors JVM heap memory and web service request latencies without requiring developers to provide hooks or logging code to identify issues.

Here are a few steps that can start you down the road towards preventing issues before they crop up in production:

1. Establish a baseline for production application KPIs

2. Utilize the baseline and KPIs for conducting realistic performance tests

3. Feed KPIs back to development and architecture teams for use in planning additional system enhancements
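The first two steps above can be sketched in a few lines. This is a minimal example, assuming the KPI is a web service response time in milliseconds; the function names, the nearest-rank percentile and the 20% tolerance are illustrative choices, not something prescribed by any particular monitoring product.

```python
import statistics

def build_baseline(samples_ms):
    """Summarize production response-time samples (ms) into a simple baseline."""
    ordered = sorted(samples_ms)
    # Nearest-rank 95th percentile, using integer ceiling division.
    rank = max(1, -(-95 * len(ordered) // 100))
    return {"mean": statistics.mean(ordered), "p95": ordered[rank - 1]}

def regressed(baseline, test_samples_ms, tolerance=1.2):
    """True if the test run's p95 exceeds the baseline p95 by more than the tolerance."""
    test = build_baseline(test_samples_ms)
    return test["p95"] > baseline["p95"] * tolerance

# Hypothetical production samples: 100 ms through 119 ms.
production = list(range(100, 120))
baseline = build_baseline(production)
print(baseline)                          # mean 109.5, p95 118
print(regressed(baseline, [150] * 20))   # True: 150 > 118 * 1.2
```

The same baseline dictionary is the kind of summary that could be handed back to development and architecture teams in step 3.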

Problem Prevention – SOA Governance

One of the most effective ways of reducing production headaches is through the implementation of an effective SOA governance strategy. In the more traditional sense, SOA governance is largely concerned with providing a contract between the business and the IT organizations. The implementation of a governance strategy ensures that the business remains in control of its own destiny, instead of handing the reins over to IT, crossing its fingers and hoping that the solutions provided meet the ever-changing demands of the business.

So why does an operations team care about governance in the first place? Well, a good governance strategy ensures that the SOA architecture is designed to reduce the number of applications and services needed to meet the business demands. Reducing the number of services needed to provide more or less the same functionality may halve the number of potential points of failure in a SOA-based architecture. Reuse of services is a key component of effective governance, and it also reduces the overall number of potential failure points for the applications: in a sense, more bang for the buck.

In addition to reducing the number of services deployed across an organization, governance also provides the assurance that policies are set on those services so that they behave as expected. For instance, setting policies that restrict or limit access to certain services ensures that web services aren’t utilized outside their defined scope. This level of problem prevention and runtime governance of services can be accomplished using HP’s SOA Policy Enforcer. Policies become all the more important when operations engineers are responsible not only for the uptime of services, but also for the legal and business requirements that they remain compliant.
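To make the idea of an access policy concrete, here is a minimal sketch of a deny-by-default check. It is not the API of HP’s policy tooling; the policy table, service names and `is_allowed` function are all hypothetical.

```python
# Hypothetical policy table: service name -> consumers allowed to call it.
ACCESS_POLICY = {
    "authentication": {"web-portal", "billing"},
    "payroll-export": {"hr-app"},
}

def is_allowed(service, consumer, policy=ACCESS_POLICY):
    """Return True only if the policy explicitly lists the consumer for the service."""
    return consumer in policy.get(service, set())

print(is_allowed("payroll-export", "hr-app"))      # True
print(is_allowed("payroll-export", "web-portal"))  # False: outside the defined scope
print(is_allowed("unknown-service", "hr-app"))     # False: no policy means no access
```

Denying by default means a newly deployed service is unreachable until someone deliberately defines its scope, which is the behavior a governance team would usually want.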

Problem Prevention – Capacity Planning

A SOA governance strategy also helps operations by providing advance notice of additional services that will be deployed in subsequent releases, so that operations teams can make the best use of existing resources or gain enough lead time to plan for additional capacity. Service definitions include the anticipated volume of requests; combining this with data from service monitoring tools helps ensure that hardware can be allocated in sufficient quantity to serve the additional demand.
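The arithmetic behind this kind of planning is simple enough to sketch. The example below assumes the anticipated request rate comes from the service definition and the per-server throughput from monitoring data; the figures, the 30% headroom and the `servers_needed` helper are assumptions made for illustration.

```python
def servers_needed(peak_rps, per_server_rps, headroom_pct=30):
    """Servers required to carry peak_rps while leaving headroom_pct spare per server.

    All arguments are integers so the ceiling division below is exact.
    """
    usable_x100 = per_server_rps * (100 - headroom_pct)  # usable capacity, scaled by 100
    return -(-peak_rps * 100 // usable_x100)              # ceiling division

# Hypothetical figures: 900 req/s today plus 500 req/s from a newly announced service,
# with each server measured at 200 req/s.
current_peak = 900
new_service_peak = 500
print(servers_needed(current_peak, 200))                     # 7
print(servers_needed(current_peak + new_service_peak, 200))  # 10
```

In this made-up case, the governance team’s advance notice translates into a request for three additional servers before the release ships.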

Additional runtime metrics obtained by SOA management tools like HP’s BAC for SOA give operations and developers real-time performance data that can be used to identify bottlenecks and add capacity where it was previously constrained.

Reduce Problem Impact – Reduce MTTR/MTTI

As we saw earlier, the cost of reducing MTTR is measured in the millions of dollars for even a modest number of issues. Any steps that can be taken to reduce the time to identify and resolve a problem will drastically reduce the cost to the business. In the case of SOA applications, the need to proactively monitor and alert on problems gains additional significance due to the greater potential for a single service to affect a larger number of applications. The more difficult challenge for the operations teams is simply to understand and be able to visualize where to begin tracking down problems.

The first step to reducing MTTR is to do everything possible to identify problems before they become critical. Reducing a problem from a severity 1 issue to a severity 2 issue is one of the key practices employed by successful operations teams. There are two means of identifying problems before they rise in severity:

* Active monitoring – to help identify issues before end users experience them

* Passive monitoring - with trend-spotting capabilities

Active monitoring is accomplished primarily by simulating end users or, in the case of web services, service consumers. These business process monitors (“BPM”s) regularly perform the requests that occur on a daily basis. These monitors may be tied into a deep-dive monitoring tool that provides end-to-end visibility into where bottlenecks or service interruptions occur, tracking everything from each network byte sent down to the line of code executed by the web service. HP’s BAC for SOA software provides this top-down monitoring capability, allowing operations to be notified as soon as a service begins misbehaving. Once the service is identified and a metric snapshot is taken (via HP Diagnostics for SOA), the operations staff has the information necessary to pinpoint the root cause of the issue or provide high-quality metrics to a tier 2 or tier 3 engineer for additional troubleshooting.
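Stripped to its essentials, an active monitor is a scheduled synthetic request with a timer around it. The sketch below is a generic illustration, not how any HP product implements its monitors; `probe` and the stand-in request functions are hypothetical, and a real monitor would call the service over the network on a schedule.

```python
import time

def probe(request_fn, max_latency_s):
    """Run one synthetic request and report availability and latency."""
    start = time.perf_counter()
    try:
        request_fn()          # stands in for a real call to the service
        available = True
    except Exception:
        available = False
    latency = time.perf_counter() - start
    return {
        "available": available,
        "latency_s": latency,
        "healthy": available and latency <= max_latency_s,
    }

def fake_healthy_service():
    return "ok"

def fake_failing_service():
    raise RuntimeError("service unavailable")

print(probe(fake_healthy_service, max_latency_s=1.0)["healthy"])
print(probe(fake_failing_service, max_latency_s=1.0)["available"])
```

Because the probe runs whether or not real users are active, it can flag an outage at 3:00am before the first customer notices it.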

Passive monitoring along with trend analysis can help to identify problems that emerge over time. For instance, the auto-baselining feature of HP BAC along with the Problem Isolation module informs the operations team when there is an aberration in the metrics. For example, a metric that captures the response time for a web service could be auto-baselined, and as the service response time starts to degrade (due to higher request volume or latency in its database requests), an alert would be sent to the operations team for investigation.
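One simple way to picture auto-baselining is a rolling window of recent samples with an alert threshold set a few standard deviations above the mean. This is a generic sketch under that assumption and says nothing about the algorithm HP BAC actually uses; the class name, window size and three-sigma threshold are illustrative.

```python
from collections import deque
import statistics

class RollingBaseline:
    """Learn a baseline from recent samples and flag values far above it."""

    def __init__(self, window=30, sigmas=3.0, min_samples=10):
        self.samples = deque(maxlen=window)
        self.sigmas = sigmas
        self.min_samples = min_samples

    def observe(self, value):
        """Record a sample; return True if it deviates from the learned baseline."""
        alert = False
        if len(self.samples) >= self.min_samples:
            mean = statistics.mean(self.samples)
            stdev = statistics.pstdev(self.samples)
            alert = value > mean + self.sigmas * stdev
        # Only normal samples update the baseline, so a sustained
        # degradation keeps alerting instead of becoming the new normal.
        if not alert:
            self.samples.append(value)
        return alert

monitor = RollingBaseline()
normal_ms = [100, 102, 98, 101, 99, 103, 97, 100, 101, 99, 100, 102]
print(any(monitor.observe(v) for v in normal_ms))  # False: all within the baseline
print(monitor.observe(180))                        # True: well above the baseline
```

Excluding alerting samples from the window is a design choice; the alternative, letting the baseline drift with every sample, would silence the alert once a slowdown had lasted long enough.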

The shift for operations teams in managing SOA applications is that many of the existing tools used to chart performance and availability simply don’t work as well in a distributed/SOA-based architecture. In even the simplest of SOA architectures, the path a transaction follows is largely dependent on the data contained in the request. Services route requests to the appropriate recipients and so when errors occur or transactions fail to complete, the determination of where the failure occurred often requires knowing what individual user sessions initiated the request and what the request contained. Because the data is split across multiple service layers, the ability to trace a specific request is reduced unless tools that provide end-to-end transaction tracing are employed.
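The core idea behind end-to-end transaction tracing can be shown with a shared request identifier that every service records as the request passes through. The event format, field names and `first_failure` function below are hypothetical and only illustrate the principle; commercial tracing tools capture this data without hand-written logging.

```python
# Hypothetical events, as each service layer might record them.
EVENTS = [
    {"trace_id": "req-41", "seq": 1, "service": "web-portal", "status": "ok"},
    {"trace_id": "req-42", "seq": 1, "service": "web-portal", "status": "ok"},
    {"trace_id": "req-42", "seq": 2, "service": "orders", "status": "ok"},
    {"trace_id": "req-41", "seq": 2, "service": "orders", "status": "ok"},
    {"trace_id": "req-42", "seq": 3, "service": "inventory", "status": "error"},
    {"trace_id": "req-42", "seq": 4, "service": "billing", "status": "skipped"},
]

def request_path(events, trace_id):
    """Return the ordered list of services that handled one request."""
    hops = sorted((e for e in events if e["trace_id"] == trace_id),
                  key=lambda e: e["seq"])
    return [hop["service"] for hop in hops]

def first_failure(events, trace_id):
    """Return the first service on the request's path that did not report 'ok'."""
    hops = sorted((e for e in events if e["trace_id"] == trace_id),
                  key=lambda e: e["seq"])
    for hop in hops:
        if hop["status"] != "ok":
            return hop["service"]
    return None

print(request_path(EVENTS, "req-42"))   # ['web-portal', 'orders', 'inventory', 'billing']
print(first_failure(EVENTS, "req-42"))  # inventory
print(first_failure(EVENTS, "req-41"))  # None
```

Without the shared identifier, the interleaved events from `req-41` and `req-42` could not be separated, which is exactly the problem described above.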

Adding to the complexity, many SOA systems consist of multiple vendors’ SOA application servers and messaging solutions. Any tool used to monitor these systems must take into account their heterogeneous nature and still present a common view to the operations teams. No matter how good engineers may feel their problem-solving skills are, the challenges presented by a vast array of web services infrastructure are daunting, even to the seasoned pro. The worst-case scenario is for every system to be monitored by a different vendor-dependent tool. Problem identification is then hampered because each tool is constrained to its own environment and doesn’t capture the interactions across system boundaries, which is where issues most often occur.

In this case, the operations teams need a single pane of glass into this new layer of application architecture. Tools such as HP’s Diagnostics for J2EE/.NET/SAP can provide service and application-level metrics along with automatically discovering application topologies by tracing requests across system boundaries. Tools like HP’s TransactionVision provide even deeper insight into the data-dependent routing of requests through heterogeneous SOA-based architectures by capturing events spanning J2EE containers, .NET CLRs, MQ queues, Tuxedo systems, or even CICS or IMS calls. Knowing exactly where a fault occurs is one of the biggest issues with SOA applications. If operations teams are provided the appropriate tools, then the tried and true operations procedures begin to kick in. Otherwise, developers and QA staff will continue to be pulled into every issue. Instead of operations being empowered with the tools and processes to manage these new SOA applications, operations will be handled by developers and no one will be left minding the store.

Reduce Problem Impact – Issue Impact and Awareness

Due to the inherent interconnectedness of SOA-based architectures, the need to reduce the impact of outages has risen in importance. The additional dependencies between applications and services require operations teams to understand the effects configuration changes have on dependent services. Through the use of application topology discovery and service dependency mapping, operations teams can isolate the impact that changes have across all applications dependent on a single service.

In terms of cost, operator configuration changes account for upwards of 60% of unplanned application downtime. In the case of SOA applications, this impact can stretch higher when operators and configuration managers are unaware of service dependencies. Though great pains may be taken by the SOA governance teams, the inability to quickly detect service changes and additional consumers can result in additional downtime due to the unexpected consequences of change. In order to restore service to a malfunctioning web service, operations teams need to have the full picture of which upstream or downstream services will be affected by an upgrade or change so that adequate measures can be implemented to reduce service disruption.
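Once a dependency map exists, working out which consumers a change will touch is a graph traversal. The sketch below assumes the map is already available as a simple dictionary; the service names and `impacted_by` function are invented for illustration, and in practice the map would come from a discovery tool rather than being written by hand.

```python
from collections import deque

# Hypothetical dependency map: each key is called by the consumers listed.
CONSUMERS = {
    "auth-db": ["authentication"],
    "authentication": ["orders", "billing"],
    "orders": ["web-portal"],
    "billing": ["web-portal", "finance-reports"],
}

def impacted_by(service, consumers=CONSUMERS):
    """Return every service or application that depends, directly or not, on `service`."""
    seen = set()
    queue = deque([service])
    while queue:
        current = queue.popleft()
        for consumer in consumers.get(current, []):
            if consumer not in seen:
                seen.add(consumer)
                queue.append(consumer)
    return seen

print(sorted(impacted_by("authentication")))
# ['billing', 'finance-reports', 'orders', 'web-portal']
print(sorted(impacted_by("web-portal")))
# []
```

Running this before a configuration change gives the operator the list of teams to notify; a change to `auth-db` in this example would reach every application in the map.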

Once again, tools are essential to map dependencies between systems, especially those that keep the models up to date on a minute-by-minute basis. HP’s Dynamic Discovery and Dependency Mapping (“DDM”) and TransactionVision software tools give operations teams a clear picture of service dependencies and allow them to determine the moment a transaction fails to complete, whether due to a bug in the code or a malformed request. Visualization allows operations teams to proactively notify the business when an outage occurs so that it can take the appropriate steps to work around the problem. This level of interaction with the business ensures a positive dialog with the IT organization.

Reduce Problem Impact – Reduce # and Cost of Resources